- A new report published today by the Digital Futures Commission reveals how digital classrooms – including Google Classroom and ClassDojo – are failing to comply with data protection regulation, leaving schoolchildren vulnerable to commercial exploitation.

- These findings come as Danish authorities have banned schools in Helsingør Municipality from using Chromebooks and Google Workspace for Education.

- The report authors call for immediate action from the UK Government, working with regulators, EdTech companies and civil society, to stop data processing that gambles with children’s privacy, safety and future prospects.

Wednesday 31st August, London: A new report published today by the Digital Futures Commission, Problems with data governance in UK schools: the cases of Google Classroom and ClassDojo, warns that educational technologies like Google Classroom are likely putting children at risk of commercial exploitation.

There has been an explosion in the use of educational technology (EdTech) in UK schools in recent years, accelerated by the need for remote education during the COVID-19 pandemic. The EdTech sector is estimated to be worth £3-4bn to the UK already¹, and the Government is keen to foster further growth in the sector.

In 2021 alone, Google Classroom was downloaded almost 1.34 million times in the UK and ClassDojo 849,000 times, making them among the most downloaded educational apps². A 2022 Digital Futures Commission survey also found that nearly 9 in 10 UK children used at least one of the 11 EdTech products they were asked about.

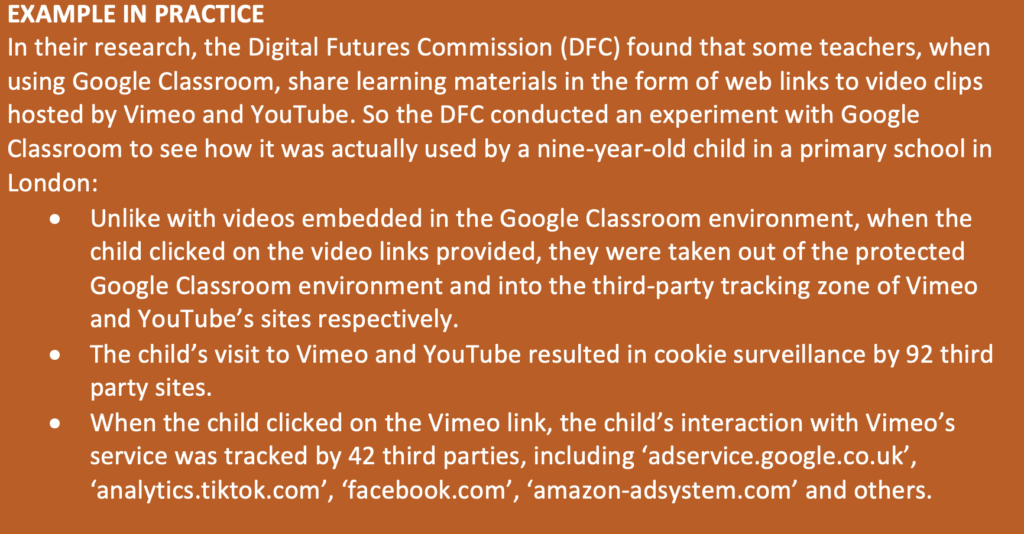

This report by the Digital Futures Commission reveals that Google and ClassDojo are collecting children's educational data and processing and profiting from that data for advertising and other commercial purposes – often in ways that are likely non-compliant with data protection law.

“Children’s learning shouldn’t be the target of profit-driven exercises by powerful tech companies. Nor should parents and teachers be put in the position of forfeiting either children’s data or valuable online learning tools because Government and EdTech companies collectively fail to make EdTech treat children fairly. We have found that it’s impossible for schools, parents, children – or even lawyers – to find out what data are collected from children and what happens to it.”

Sonia Livingstone OBE, report co-author, Research Lead at the Digital Futures Commission, and Professor of Social Psychology at LSE

The UK Government and regulators have yet to match their European counterparts in putting fit-for-purpose risk mitigation measures in place. As a result, British children using digital tools in the classroom are vulnerable to having their data co-opted by global companies against their best interests. This could lead to data breaches, further monetisation and long-term consequences for children's prospects, given the increasing use of automated processing in workplaces, universities and the insurance industry.

“What is happening in our schools is no less impactful on children than in any other environment. The processes and practice of digital technology used in schools is deliberately, seamlessly and permanently extracting children’s data for commercial purposes – purposes that may not be aligned with the best interest of the child, the broader education community or indeed the expectations of society more broadly. EdTech is neither good nor bad, and nothing in this report prevents the innovation and spread of technology that benefits children and their knowledge or wellbeing. Like most things digital, it is simply a question of uses and abuses.”

Baroness Beeban Kidron, Founder of 5Rights Foundation and Commissioner at the DFC

As it stands, the responsibility for protecting children's privacy from these intrusions falls on schools, parents and even children themselves. Yet EdTech's corporate power, its ethos of data maximisation, and its commercially motivated policies and designs place a near-impossible burden on anyone trying to manage how children's data are used.

The report concludes that without immediate, joined-up action from Government, regulators and EdTech companies, children's data and privacy will be put at even greater risk. The authors set out clear recommendations to ensure that commercial interests no longer trump children's best interests, as they have done at Big Tech for far too long.

References

1 Department for Education (DfE) (2019), Walters (2021); see also DCMS (2021)

2 Clark, D. (2022b). Most downloaded educational mobile apps in the UK 2021. Statista. Retrieved 30 June 2022 from https://www.statista.com/statistics/1266710/uk-most-downloaded-education-apps

NOTES TO EDITORS

In this report we define 'education data' as the personal data collected from children through their participation in school for the purposes of teaching, learning and assessment, safeguarding and administration. Over the past year of research, a team of lawyers and social scientists consulted widely with experts and end-users, including schools and children.

The report is organised around four problems, each with a matching recommendation:

- Problem 1: It is near-impossible to discover what data is collected by EdTech. We recommend that government takes steps to ensure transparency and accountability for processing children’s education data.

- Problem 2: EdTech profits from children’s data while they learn. We recommend that government takes steps to ensure commercial interests in education data do not undermine children’s education and best interests.

- Problem 3: EdTech’s privacy policies and/or legal terms do not comply with data protection regulation. We recommend that government takes steps to ensure that EdTech provides transparent privacy policies and legal terms for their processing of children’s education data in compliance with data protection laws.

- Problem 4: Regulation gives schools the responsibility but not the power to control EdTech data processing. We recommend that government takes steps to facilitate and coordinate rights-respecting contracts between schools and EdTech providers.

In preparing this report, we asked questions about EdTech to a nationally representative sample of 1,014 children aged 7 to 16 as part of Family Kids & Youth's digital wellbeing panel survey in summer 2022. We found that:

- 33% had been asked by their school to use Google Classroom this year (34% primary and 32% secondary pupils).

- 18% had been asked by their school to use ClassDojo this year (27% primary and 9% secondary pupils).

- Only one in ten (and even fewer when it came to sensitive data) thought it acceptable for the apps they use at school "to share information about you and your classmates with other companies".

- Fewer than a third said their school had talked to them about why it uses technology for teaching and learning, and even fewer reported being told who their education data were shared with or what their data rights are.

Contact

For any press enquiries, please contact media@5rightsfoundation.com.

For any questions relating to the work of the Digital Futures Commission, please get in touch here.